Using Generative AI to Illustrate a Short Story

I’m so excited to announce the re-release of A Midnight Clear, my Christmas short story, published using Adobe Express, just in time for the holidays!

A Midnight Clear – A Story of Hope

In the past, I have illustrated my Christmas story with images from Adobe Stock and composites created in Photoshop; I've also converted it to an audio podcast, complete with music and sound effects. And I've shared my process for creating both versions through other articles on Behance.

This year, I've turned to Adobe Firefly to generate most of the storytelling imagery, like the ones you see below.

I also took the time to remix the podcast version using Audition, Premiere Pro and After Effects. I think it sounds (and looks) awesome.

I'm thrilled to not only share this re-imagining of a story I wrote nearly 40 years ago - using a typewriter, no less - but also to relate my experience in using Generative AI as my creative assistant.

As I've done in the past, I want to break down my process for you. Much like other recent projects and articles I've written on Generative AI (specifically, Adobe Firefly), I will share how this new technology fits into my creative workflow, aiding me in making my dream a reality, not replacing my creativity. But first, a word or two on this new "assistant."

AI as the Creative Assistant

I like to think of Gen AI as a "helper," not as the "solution," to my creative goals. I've voiced this same concept on other projects here on Behance. If the AI is doing everything and you are just blindly accepting the output, while you may be creating artwork, you are not creating art. Using Generative AI as part of the process, to enhance your true art or craft, is different. There's a synergistic effect, somewhat akin to collaborating with others (although not to the same depth human collaboration would produce).

To use Generative AI creatively and intelligently means that you are doing more than just accepting the first image supplied by the AI. And sometimes, it means you aren't accepting anything at all. It’s about knowing when to apply this technology and when to rely on more traditional methods. It’s about knowing when a Firefly-generated image is “right” and “ready” to help tell your story.

Prompt: concept art illustrating the creative process with the help of a machine, Art, cinematic, acrylic paint

The Process

In general terms, below are my considerations and steps. We'll look at specific examples pulled from my story and tied to this guidance, shortly.

1. Determine which AI service/model is best for your needs.

2. Understand your reasons for using Generative AI.

3. Understand the technology and how to use it.

4. Have a clear - but flexible - idea of what you are trying to achieve.

5. Create an outline/storyboard identifying where Gen AI will be beneficial.

6. Know when Gen AI is suitable.

7. See the potential of generated assets.

Examples

Determine which AI service/model is best for your needs

From ethical and legal perspectives, not all Generative AI tools were created equal. Adobe Firefly was designed from the ground up to be safe for commercial use, not just personal use. Adobe is so sure of this fact that the company even offers indemnity coverage for commercial customers. While other tools are moving in this direction, Adobe Firefly was the first. More details on the FAQ.

Adobe Firefly is only trained on public domain imagery, content that is no longer under copyright, and the collection of more than 300 million professionally-created images found on Adobe Stock.

To my knowledge, Adobe was the first company to offer a compensation model for the creatives whose work is used in training Firefly.

Understand your reasons for using Generative AI

Are you trying to save time? Are you trying to keep costs down in production? Are you looking for inspiration? Are you trying to achieve results you don't have the technical/creative skills to achieve on your own? Are you just kicking the tires of Gen AI? These are all perfectly good reasons to use generative AI.

Understand the technology and how to use it

Be prepared to experiment with the interface. Get comfortable with the tool. Do some reading, watch some videos. And remember that playing is half the fun. Firefly makes the generation process a distinctly visual one, incorporating a range of tools to help you generate your image without getting lost in code or markup. You can even use an existing image to assist in matching the style for your output. This was very handy for me when I wanted to match a particular composite I created for my original story. I uploaded the composite as my style reference, added my prompt and got what I was hoping for within two generations.

Firefly Interface

LEFT: Original composite. RIGHT: Firefly generated image, using text prompt and reference image

Have a clear - but flexible - idea of what you are trying to achieve

In other words, know your story or message and be prepared for happy accidents created by the AI. In my case, I knew this story very well. I knew the message and mood I was going for. I also had a lot of text to work with and many opportunities to enhance or embellish the story visually. Your situation may be different; you might only have a couple of paragraphs, or even just a few words of copy. It's critical that you understand the overarching message you are trying to convey, and also remain open to surprises. Because Generative AI is not "thinking," or "choosing," in the human senses of the words, it could totally misinterpret your prompt or your goals.

Several times, I used copy from my story as the text prompt. I learned that - out of context - a block of text may not give me the results I wanted. Paragraphs in a story are linked to each other. It's often crucial to understand and remember the paragraph - or paragraphs - before to appreciate the meaning of the paragraph you are reading. If you simply give AI an excerpt from a story, you COULD get some cool results, or they could be off-base. So, I would often tweak the prompt to be more descriptive.

Top two images generated using the older Firefly Version 1 algorithm. Bottom two generated with Firefly 2.

In the example above, the prompt I began with was a direct quote from my story:

"It was a night symbolic of December in the north. Quiet, and the air so cold you almost thought you could shatter it like a pane of glass. Of the four other cabins on the lake, mine was the only one with a welcoming light shining in the window."

Over time, I chose another quote and added to it something more descriptive of the scene, rather than the mood:

"a clear winter night, the moon casts a serene glow across the fresh blanket of snow. Giant spruce, whispering to me in the breeze, carved long shadows in the snow. The frozen sheet of the lake was also covered, but I could still make out the dip between the shore and water. beautiful log cabin sits near the lake, smoke rising from the chimney"

Note: When it was first in beta, I began testing Firefly with my story, and I can tell you, as I write this article, that Firefly has evolved significantly since it was first made available. The quality and realism are much improved, and it seems to better understand natural language, although it still seems to struggle with numerical values, like "four cabins."
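The prompt tweaking described above is really just careful text assembly, so it can be sketched in a few lines of Python. This is only an illustration and not part of Firefly: the `build_prompt` helper and its parameter names are hypothetical, and the resulting string is simply what you would paste into the Firefly prompt field.

```python
def build_prompt(scene, details=(), style_tags=()):
    """Join a scene description, concrete details and style tags into one prompt.

    Hypothetical helper for illustration only; it does not call Firefly.
    """
    parts = [scene] + list(details) + list(style_tags)
    # Trim whitespace and trailing punctuation, and drop empty fragments.
    cleaned = [p.strip().rstrip(".,") for p in parts]
    return ", ".join(p for p in cleaned if p)


prompt = build_prompt(
    "a clear winter night",
    details=["beautiful log cabin sits near the lake",
             "smoke rising from the chimney"],
    style_tags=["realistic", "photo"],
)
print(prompt)
```

Keeping the scene, the concrete details and the style tags as separate pieces makes it easier to change one of them between generations and see which change actually moved the result.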

Create an outline/storyboard identifying where Gen AI will be beneficial

In my project, the outline was the actual story, and the original artwork was a good storyboard; I had already identified the written elements that would most benefit from illustration.

Firefly also allows me to create favorites, which results in a bit of a moodboard, where I can view variations I liked and also see the exact prompts I used to get the resulting images.

Note that Firefly Favorites is cookie-based, tied to the browser you're working in. So, if I add those favorites while in Google Chrome, that's the only browser in which I will see them; if I start working in Safari, or Microsoft Edge, I will NOT see the favorites I added in Chrome.

Adding Firefly variations to favorites means you also have quick access to the original text prompt.

Know when Gen AI is suitable

This should be an ongoing - or overriding - consideration. You may not be able to identify those situations until you are faced with them. For example, if you find yourself creating a significant number of generated variations and are still no closer to your vision, it's time to turn to other resources, such as stock assets, original art or composited work.

Old man in the woods

Try as I might, I could not get the exact look and feel of this composite image, created with two Adobe Stock assets, and then enhanced with color and lighting treatments. This is one of the few illustrations I did not replace.

See the potential of generated assets

By this I mean, take the time to discern when a generated asset is almost right, but could be perfect with just a couple of tweaks in Photoshop or Adobe Express. More often than not, any image you generate could benefit from some additional forms of editing such as cropping, additional generative fill, clone-stamping, color treatments, etc. Take the time to really study your generated images. Be critical. You - not the technology - are the differentiating element when it comes to AI.

One nearly universal edit I made was to color grade the majority of the images, as the story takes place at night, and - even when prompted - Firefly didn't always give the cool tone I was looking for. Again, this is a subjective choice, and a simple example of why human creativity and involvement is so important when generating graphics using AI.
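To give a feel for what a cooling color grade does to the pixels, here is a minimal Python sketch. It is not how Camera Raw or Photoshop implement grading; the `cool_grade` function and the `strength` value are assumptions chosen purely for illustration, and a pixel is treated as a plain (red, green, blue) tuple in the 0-255 range.

```python
def cool_grade(rgb, strength=0.1):
    """Shift one (r, g, b) pixel toward a cooler tone.

    Hypothetical illustration: scales red down and blue up by `strength`.
    """
    def clamp(value):
        # Keep the channel inside the valid 0-255 range.
        return max(0, min(255, round(value)))

    r, g, b = rgb
    return (clamp(r * (1 - strength)), clamp(g), clamp(b * (1 + strength)))


warm_snow = (200, 180, 160)  # made-up sample value, not taken from the artwork
print(cool_grade(warm_snow))
```

How strong the shift should be remains a judgment call made by eye, which is exactly the kind of subjective decision the tool cannot make for you.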

Below, I've singled out a few examples of images that were nearly there, but not quite. Images on the left are the ones generated by Firefly. Versions on the right were tweaked in a variety of ways, which I will share.

Note that even with this small sampling, my human input and inspiration were needed to realize my final vision. This is not something Firefly - or any other Generative AI tool - can do without your decision-making and interaction.

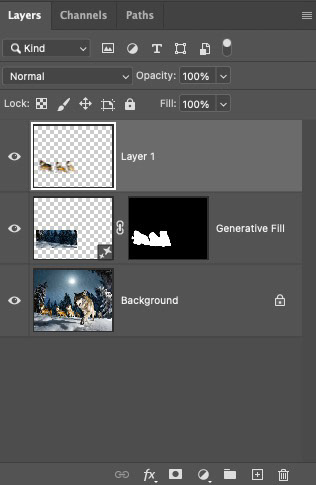

Wolf Howl

I loved the original generation of this scene. I was, frankly, blown away by it. But I wanted to use it with a special Adobe Express web layout feature called Window. The Window function fills the entire web page from left to right with the image, while only showing a segment of the scene as you scroll up or down. If, in Express, this original image was scaled to fit the entire window width, the wolf would only be partially in view at any given time. As I wanted to have the wolf in full view within the window, I needed a much wider image than Firefly could produce. So, I downloaded the file as a PNG (to retain quality) and then first ran it through Topaz Gigapixel.

Yes, I know - GASP!! - but the truth is Topaz does a better job than Camera Raw's Super Resolution when upsizing illustrative graphics.

After upsizing, I brought the image into Photoshop and used the Crop tool and Generative Expand to turn the image into more of a panoramic scene. I extended the canvas about 3 or 4 times in 300-pixel-wide increments, so that Firefly wasn't having to spread itself too thin (currently, Generative Expand/Fill is limited to 1024-pixel samplings at the long edge). The fact this image was much more illustrative than photographic gave me a little leeway in terms of resolution.

Note: In hindsight, extending the canvas of the original, first, and then moving to Topaz might have been a better workflow.
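The incremental canvas extension described above comes down to simple arithmetic, sketched below in Python. The `expansion_steps` function and the example widths are hypothetical (the actual pixel dimensions of my image are not given here); only the 300-pixel step comes from the workflow itself.

```python
def expansion_steps(current_width, target_width, step=300):
    """Return the canvas width after each incremental extension."""
    if step <= 0:
        raise ValueError("step must be a positive number of pixels")
    widths = []
    width = current_width
    while width < target_width:
        # Never overshoot the target on the final extension.
        width = min(width + step, target_width)
        widths.append(width)
    return widths


# Hypothetical sizes: a 2000-pixel-wide image widened to 3100 pixels.
print(expansion_steps(2000, 3100))  # [2300, 2600, 2900, 3100]
```

Each step stays well under the 1024-pixel limit mentioned above, which is the reason for extending in several small passes rather than one large one.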

The Chase

This image is very photo-realistic, right down to the ground-level, wide-angle viewpoint of the "camera." The lead wolf, closest to the camera, looks great, but the wolves in the pack to the left look distorted on close inspection. I loved everything about this image except for that detail.

I decided it would be great to add blur to those wolves, in order to mask the defects but also add a sense of urgency/tension to the image.

In Photoshop, I used Select Subject to select and copy the three wolves to a new layer. Then, on the background layer, I used the marquee tool and Generative Fill to remove the wolves. Next, on the new wolf layer I applied a motion blur filter to the wolves giving that sense of speed and oncoming danger. While not shown below, I also used the Camera Raw filter to color grade the image.

The Attack

Generative AI can "hallucinate"; depending on the text prompt details (or lack thereof), you can get some bizarre, often humorous results. Take this prompt, for example:

"fallen man, fighting off fierce wolf at night in forest, realistic, photo, hackles, growling, winter, deep snow, fierce, hungry,"

Say no more, right? Even disregarding the wacky lighting, they're just weird images. Again, the AI can't choose for you. It's not giving you recommendations; it's providing variations based on its training set and your prompt. You are the final arbiter.

I got a little closer by using the following prompt, but I didn't like Mr. Crazy Man, who seemed to be yelling at the wolf. And the wolf seemed oblivious to him as well.

"man on ground, defending against fierce wolf at night in forest, realistic, photo, hackles, growling, winter, deep snow, fierce, hungry."

However, I saw the potential here; this was perhaps the most ferocious depiction of a wolf that I had received so far. And I had close to 50 or more saved variations of "fierce" wolves.

I decided to use a combination of Topaz Gigapixel, to give me a better quality, slightly larger image to work with, and Generative Fill in Photoshop to remove the man. Adding the color grading was the icing on the cake for this terrifying image.

Sky View

Sometimes, the changes you need are subtle. I wanted the effect of lying on the ground and looking up at the stars in a forest. Firefly did a great job, overall! However, that gap at the top of the frame just bothered me, visually. So, using Generative Fill (in Firefly this time), I was able to paint the empty area and tell Firefly to give me another tree top. Lighting, perspective, all a good match. Job done.

Tip: The term, Generative Fill, is Adobe's branded description for what is referred to as "in-painting."

Searching

In one scene, my protagonist has to venture out into the deep forest, searching for the old man. I tried a variety of prompts, all subtly different, but often got too many snow tracks and not enough trees. I settled on the image you see below and used Generative Fill in Firefly to do the following:

- remove burning tree branches (Firefly was hallucinating)

- add trees to the foreground (to increase the sense of depth)

- add more trees in the background

- remove some of the tracks in front and to the side of the character.

When I compare these two images, I'm struck by how much impact these simple changes have on the "story" of the image. The meaning, the mood are both totally altered.

Transformation

In the second-last scene of the story, the old man transforms into an angel. This was not the easiest image to generate to my satisfaction. Oddly enough, in some variations, the wings were even on the wrong side of the body.

Angel images that I saved to Favorites in Firefly

In my preferred option, the original Firefly version had the old man standing in the middle of a dirt road in the forest with his wings (every time a bell rings...). Generative Fill took care of the road easily enough, matching perspective and even adding appropriate shadows based on the rest of the image, but I also wanted a short sequence illustrating his "transformation." This meant some subtractive work was required. Removing those wings with traditional tools (Clone Stamp, Content Aware Fill) would have been a task beyond my skill - or patience - level. However, a couple of rough selections with the lasso tool, and an empty text prompt field... Voilà!

Gen AI is not always "handy."

It's a sad fact that Gen AI often struggles with limbs and, well, digits on those limbs. If you look closely at the series above, you'll notice the hands appear more like flippers, with three large digits, somewhat akin to Danny DeVito's Penguin character in Tim Burton's Batman Returns.

Yep, you're not getting that vision out of your head anytime soon. You're welcome.

At first I was just going to let it go (hey, it's an illustration, right?). But I decided - during the writing of this article - to see if Gen Fill in Photoshop could do a better job of the hands. Well, it took a few generations, but I did actually get better hands. Not perfect, by any means, but far less flipper-like.

A Trick of the Eye

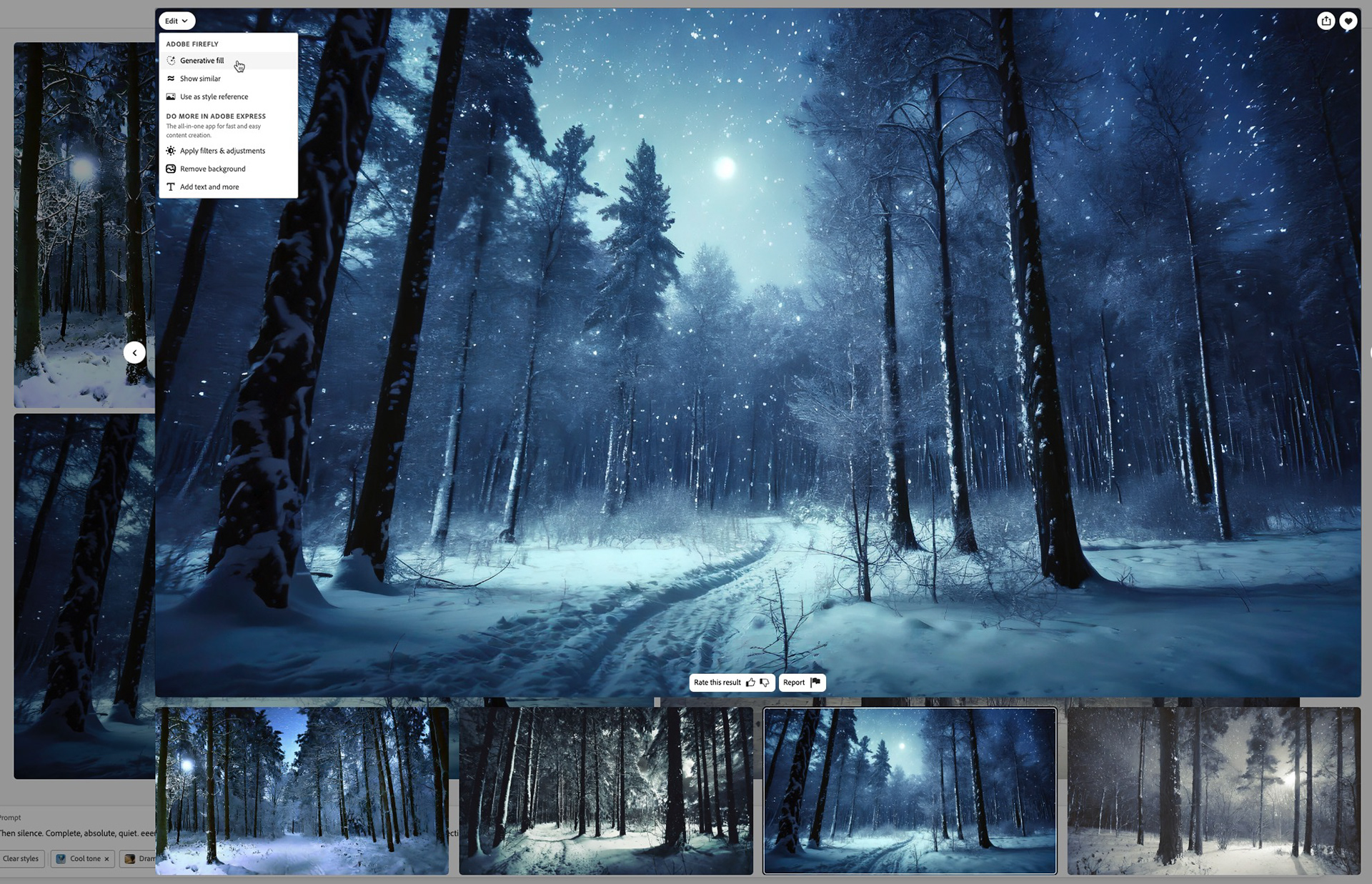

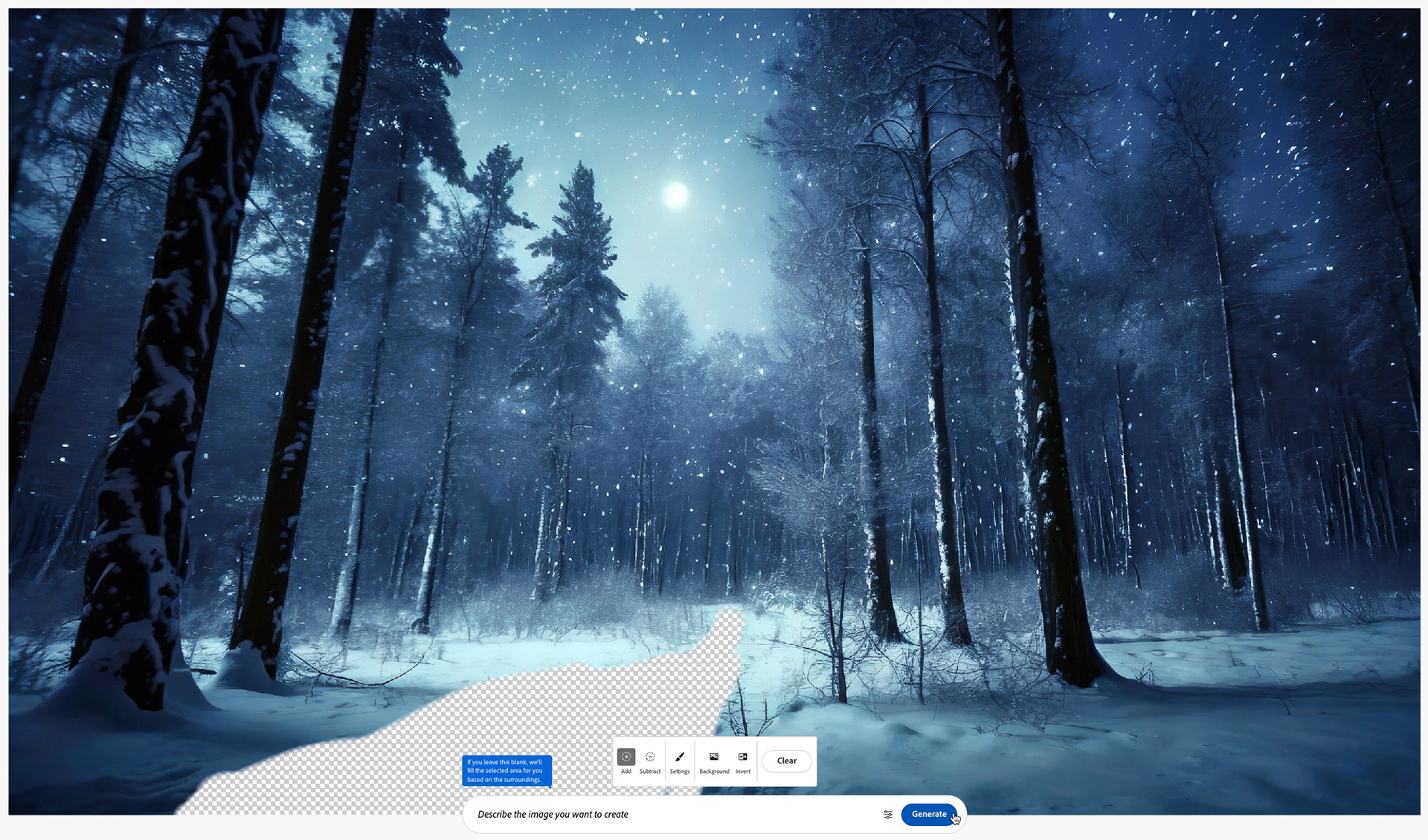

As my protagonist searches for the old man, he is in constant fear of being tracked down by a pack of wolves. At one point he thinks he sees something out of the corner of his eye. Movement, a shadow, a trick of the eye...or is it?

Sometimes, it's easier to build your AI-generated scene in pieces. I wasn't having much luck when I asked for the wolf silhouette and the dark wooded scene in winter. So I opted to have Firefly create the main scene first. In general, I liked the results, but Firefly seemed to have this thing about placing a path in my landscape images, when all I wanted was clean, unmarked snow.

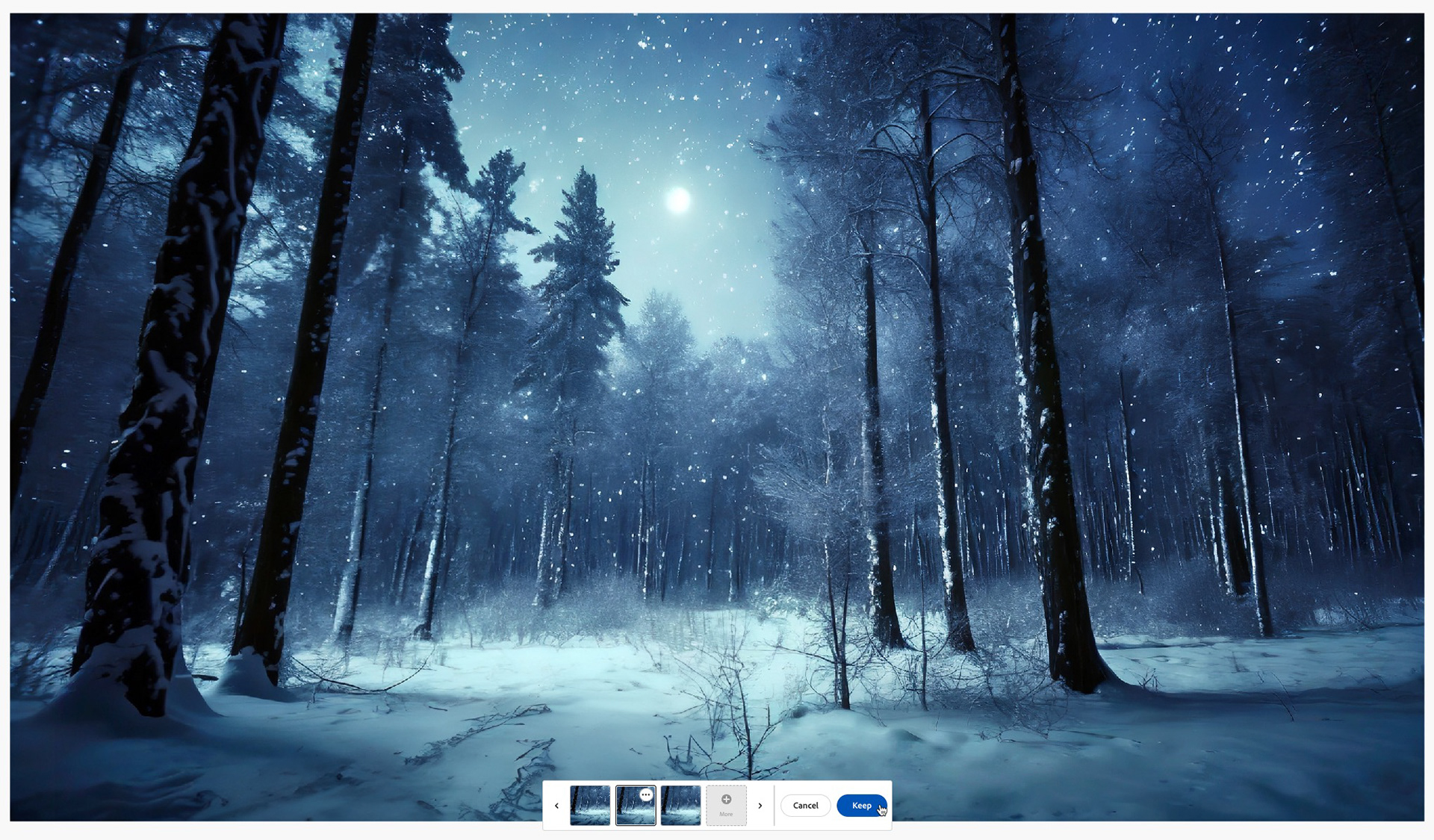

I've mentioned it already, but it's very easy to use Generative Fill right within Firefly itself, by clicking on the Edit button at the top left of any image, and choosing Generative Fill. This launches an editing window where you use a brush to paint over the area you want to affect. You can add a text prompt or just click Generate to create variations. For the path, I just let Firefly do its thing and I got three acceptable results on the first try.

When you see something you like, just click Keep. Otherwise, you can generate more variations, or choose cancel and start over with your painted selection, even modifying the selection if you choose.

The next step was to add the silhouette of the wolf. Using the same technique as above, but this time incorporating a text prompt, I managed a few acceptable results. The silhouette was still "off," though, for a few reasons:

- The wolf was missing a shadow underneath its body

- The wolf was missing a leg in the version I liked

- The silhouette was too clean, too sharp

I knew those edits would be easier to achieve in Photoshop. So, I downloaded the scene and brought it into the application. As I did for the Chase image, I used Select Subject to place the wolf shape on its own layer. Then I created a very simple shadow using a feathered ellipse selection, filled with a sampled color from the shadows in the forest. To add a new leg, I used the lasso tool to select the visible foreleg, copied it and pasted it into a new layer. I rotated the leg slightly with the Transform tool.

Lastly, I combined all the layers into a new, merged layer (Shift+Option+Command+E or Shift+Alt+Ctrl+E), converted the new layer to a Smart Object and opened it in the Camera Raw Filter. All I did in Camera Raw was apply a small amount of grain to the image, to help everything blend together more smoothly. This helps with a variety of composites, and it's a good idea to view your artwork at 100% when you're experimenting with grain.
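For the curious, the idea behind that grain step can be sketched in Python: the same small, random variation is laid over every part of the merged image, so pasted and generated pieces share one texture. This is a conceptual illustration only, not what Camera Raw does internally; `add_grain`, its `amount` and `seed` parameters, and the sample values are all hypothetical.

```python
import random


def add_grain(pixels, amount=4.0, seed=0):
    """Add Gaussian noise to a list of 0-255 grayscale values.

    Hypothetical illustration; `amount` is the noise standard deviation.
    """
    rng = random.Random(seed)  # seeded so the grain is repeatable
    grained = []
    for value in pixels:
        noisy = value + rng.gauss(0, amount)
        # Clamp so the result stays a valid 0-255 value.
        grained.append(max(0, min(255, round(noisy))))
    return grained


flat_shadow = [40, 40, 40, 40, 40, 40]  # made-up, perfectly flat shadow area
print(add_grain(flat_shadow))
```

A perfectly flat area like the simple shadow is where a composite tends to give itself away, which is why a little shared noise helps it sit in the scene.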

Wrap Up

And that's it, faithful reader. Thank you for hanging in with me on this journey. I hope you have found this workflow breakdown helpful, and more importantly, you've seen how you can incorporate Generative AI into your own creative process and not feel like you're signing up for a SkyNet subscription, or selling your innovative soul.

Remember, too, what AI helps you create is just a collection of pixels; you can utilize any of your Photoshop skills to further enhance or embellish those images to get exactly what you want. Consider tools like Sky Replacement to get a better sky, or Neural Filters like Smart Portrait to touch up an AI-generated face, or Landscape Mixer to change an image from day to night, or summer to winter, or Depth Blur to adjust the focus point in a scene. What you do is only limited by your own creativity.

Before and after applying the Landscape Mixer Neural Filter and masks to achieve more of a night time look.

Generative AI, while powerful, amazing and seemingly magical at times, is - and should be treated as - just another tool in your creative tool box. I know some - perhaps many - don’t feel that way. But this technology isn’t going away, and it’s only going to get better.

Let’s spend time learning how to make use of it so there is no negative impact to our professional lives. Let’s become go-to visionary resources for our customers as they flounder their way through this rapidly evolving AI space. We become their anchor in a binary sea. Let’s become “prompt whisperers,” for them. And for us, let’s find ways to reduce the drudgery of a project so we can focus on creating more, maybe even making things we never thought possible!

Ok, getting off my soapbox now. :-)

To save you having to scroll back to the top, once again, here is the link to my Christmas Story, A Midnight Clear, or feel free to read the story, embedded below.

I do hope you enjoy the story, and that you share it with friends, family, and anyone who you feel will benefit from reading it or listening to it.

Until next time, Merry Christmas, and Happy New Year!